Microsoft Fabric: (Part 1 of 5)

An insight 42 Technical Deep Dive Series presents A Deep Dive into Azure’s Future of Cloud Data Platforms.

The Unending Quest for a Unified Data Platform

In the world of data, the only constant is change. For decades, organizations have been on a quest to find the perfect data architecture—a single, unified platform. It should handle everything from traditional business intelligence to the most demanding AI workloads. This journey has taken us from rigid, on-premises data warehouses to the flexible, but often chaotic, world of cloud data lakes. Each step in this evolution has solved old problems while introducing new ones. It leaves many to wonder if a truly unified platform was even possible.

This 5-part blog series will provide a deep and critical analysis of Microsoft Fabric, the latest and most ambitious attempt to solve this long-standing challenge. We will explore its architecture, its promises, its shortcomings, and its potential to reshape the future of data and analytics. In this first post, we will set the stage by examining the evolution of data platforms. Additionally, we will introduce the core concepts behind Microsoft Fabric.

A Brief History of Data Platforms: From Warehouses to Lakehouses

To understand the significance of Microsoft Fabric, we must first understand the history that led to its creation. The evolution of data platforms can be broadly categorized into distinct eras. Each era has its own set of technologies and architectural patterns.

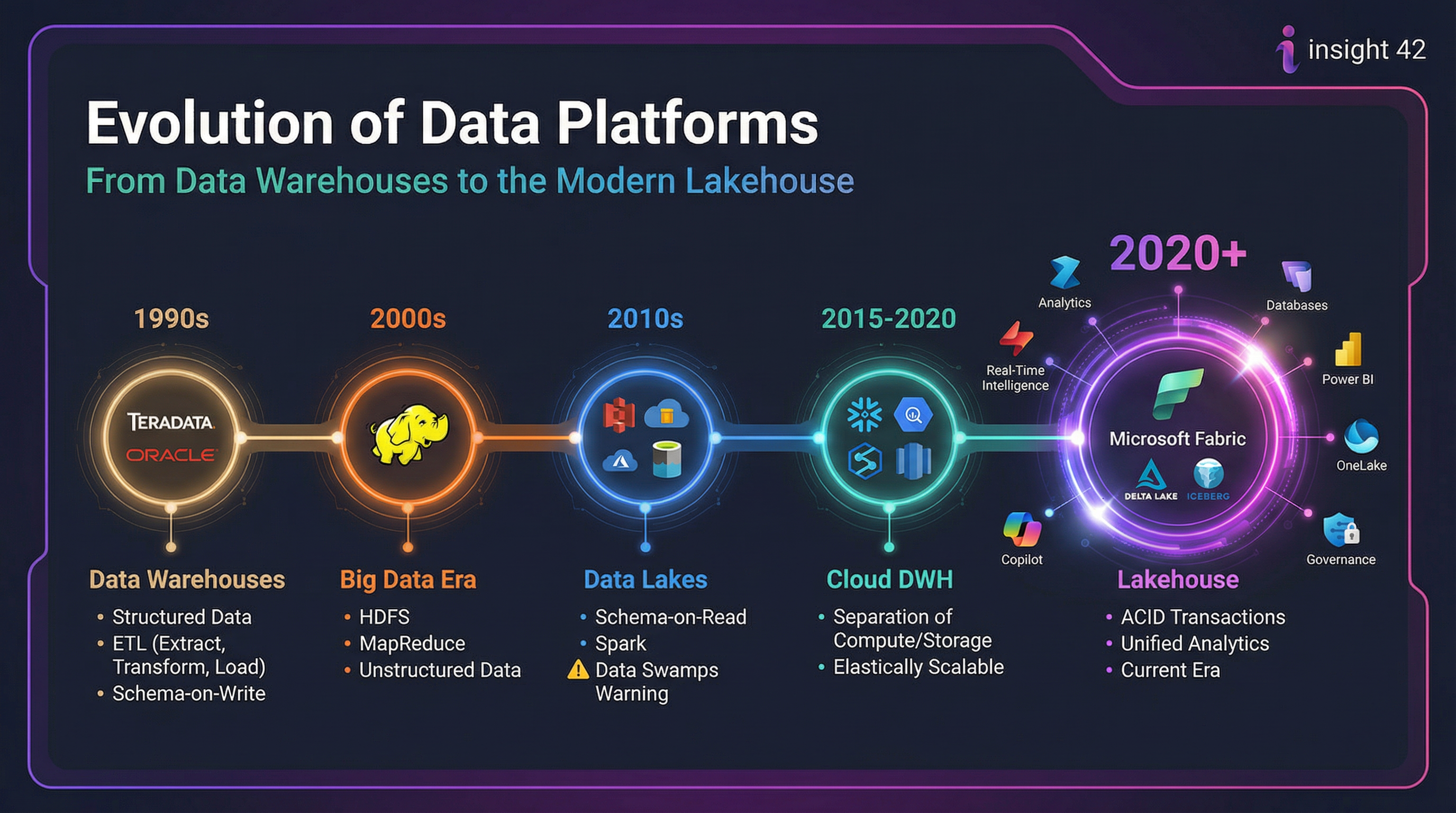

Figure 1: The evolution of data platforms, from traditional data warehouses to the modern lakehouse architecture.

The Era of the Data Warehouse

In the 1990s, the data warehouse emerged as the dominant architecture for business intelligence and reporting [1]. These systems, pioneered by companies like Teradata and Oracle, were designed to store and analyze large volumes of structured data. The core principle was schema-on-write, where data was cleaned, transformed, and loaded into a predefined schema before it could be queried. This approach provided excellent performance and data quality but was inflexible and expensive. This was especially true when dealing with the explosion of unstructured and semi-structured data from the web.

The Rise of the Data Lake

The 2010s saw the rise of the data lake, a new architectural pattern designed to handle massive volumes and variety of data. Modern applications generated this data. Built on cloud storage services like Amazon S3 and Azure Data Lake Storage (ADLS), data lakes embraced a schema-on-read approach. This allowed raw data to be stored in its native format and processed on demand [2]. This provided immense flexibility but often led to “data swamps.” These are poorly managed data lakes with little to no governance. They make it difficult to find, trust, and use the data within them.

The Lakehouse: The Best of Both Worlds?

In recent years, the lakehouse architecture has emerged as a hybrid approach. It aims to combine the best of both worlds. It takes the performance and data management capabilities of the data warehouse with the flexibility and low-cost storage of the data lake [3]. Technologies like Delta Lake and Apache Iceberg bring ACID transactions and schema enforcement. Other data warehousing features are added to the data lake. This makes it possible to build reliable and performant analytics platforms on open data formats.

Introducing Microsoft Fabric: The Next Step in the Evolution

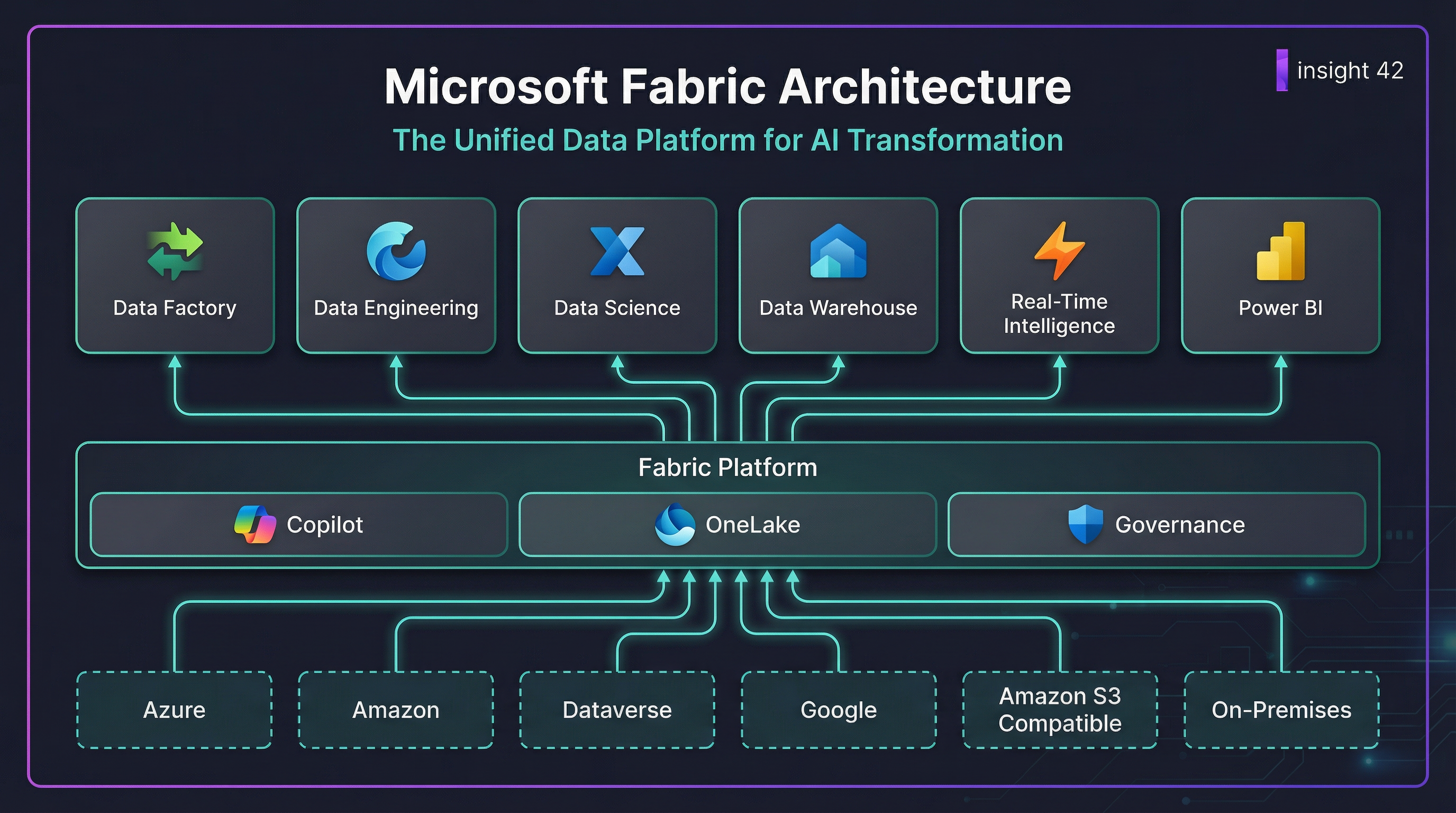

Microsoft Fabric represents the next logical step. In this evolutionary journey, it is not just another data platform. It is a complete, end-to-end analytics solution delivered as a software-as-a-service (SaaS) offering. Fabric integrates a suite of familiar and new tools into a single, unified experience. These tools include Data Factory, Synapse Analytics, and Power BI. All are built around a central data lake called OneLake [4].

Figure 2: The high-level architecture of Microsoft Fabric, showing the unified experiences, platform layer, and OneLake storage.

The Core Principles of Fabric

Microsoft Fabric is built on several key principles that differentiate it from previous generations of data platforms:

| Principle | Description |

|---|---|

| Unified Experience | Fabric provides a single, integrated environment for all data and analytics workloads. It supports data engineering, data science, business intelligence, and real-time analytics. |

| OneLake | At the heart of Fabric is OneLake, a single, unified data lake for the entire organization. All Fabric workloads and experiences are natively integrated with OneLake, eliminating data silos. This reduces data movement. |

| Open Data Formats | OneLake is built on top of Azure Data Lake Storage Gen2. It uses open data formats like Delta and Parquet, ensuring that you are not locked into a proprietary format. |

| SaaS Foundation | Fabric is a fully managed SaaS offering. This means that Microsoft handles infrastructure, maintenance, and updates, allowing you to focus on delivering data value. |

The Promise of Fabric

The vision behind Microsoft Fabric is to create a single, cohesive platform serving all the data and analytics needs of an organization. By unifying the various tools and services that were previously separate, Fabric aims to:

- Simplify the data landscape: Reduce the complexity of building and managing modern data platforms.

- Break down data silos: Provide a single source of truth for all data in the organization.

- Empower all users: Enable everyone from data engineers to business analysts to collaborate and innovate on a single platform.

- Accelerate time to value: Reduce the time and effort required to build and deploy new data and analytics solutions.

What’s Next in This Series

While the vision for Microsoft Fabric is compelling, the reality of implementing and using it in a complex enterprise environment is far from simple. In the upcoming posts in this series, we will take a critical look at various aspects of Fabric. This includes:

| Part | Title | Focus |

|---|---|---|

| Part 2 | Data Lakes and DWH Architecture in the Fabric Era | Medallion architecture, lakehouse patterns, data modeling |

| Part 3 | Security, Compliance, and Network Separation Challenges | Security layers, compliance, network isolation limitations |

| Part 4 | Multi-Tenant Architecture, Licensing, and Practical Solutions | Workspace patterns, F SKU licensing, cost optimization |

| Part 5 | Future Trajectory, Shortcuts to Hyperscalers, and the Hub Vision | Cross-cloud integration, future roadmap, universal hub concept |

Join us as we continue this deep dive into Microsoft Fabric. We will separate the hype from the reality. Our goal is to provide you with the insights needed to navigate the future of cloud data platforms.

References

This article is part of the Microsoft Fabric Deep Dive series by insight 42. Continue to Part 2: Data Lakes and DWH Architecture →

#MicrosoftFabric #UnifiedDataPlatform #CloudDataPlatforms #DataLakehouse #FabricDeepDive #DataArchitecture #OneLake #DataPlatform #DataEngineering #BusinessIntelligence #SaaSData #DataSilos #FabricImplementation #CloudDataStrategy #DataAnalytics